Schur Orthogonality

If $G$ is nonabelian, the group of linear characters is too small to be an orthonormal basis of $L^2 (G)$. Still the problem of giving an orthonormal basis of $L^2 (G)$ has a satisfactory solution.

If $V$ is a complex vector space, the set $\operatorname{End} (V)$ of vector space endomorphisms of $V$ is a ring under addition and composition. Its group of units is the group $\operatorname{GL} (V)$ of invertible linear transformations. Assuming that $V$ has finite dimension $d$, $\operatorname{End} (V) \cong \operatorname{Mat}_d (\mathbb{C})$ and $\operatorname{GL} (V) \cong \operatorname{GL} (d, \mathbb{C})$.

By a (complex) representation of $G$ we mean an ordered pair $(\pi, V)$, where $V$ is a complex vector space and $\pi : G \longrightarrow \operatorname{GL} (V)$ is a homomorphism; or we say that $\pi : G \longrightarrow \operatorname{GL} (V)$ is a representation. For finite groups, we may limit ourselves to the case where $V$ is finite-dimensional, and indeed when we say that $(\pi, V)$ is a representation, we will always assume that $V$ is finite-dimensional.

If $V$ is a vector space over a field $F$, a linear functional is a linear map $V \longrightarrow F$. The linear functionals themselves form a vector space $V^{\ast}$, the dual space of $V$. If $V$ is finite-dimensional, then the dual space has the same dimension; indeed, if $x_1, \cdots, x_D$ is a basis of $V$ there is a dual basis $\lambda_1, \cdots, \lambda_D$ of $V^{\ast}$ such that $\lambda_i (x_j) = 1$ if $i = j$, and $0$ if $i \neq j$. The situation is quite analogous to that of the dual group, already discussed: $V \cong V^{\ast}$ but not canonically. However $V \cong (V^{\ast})^{\ast}$ and this isomorphism is natural.

The dual space is a contravariant functor: if $\phi : V \longrightarrow W$ is any linear transformation, then composition with $\phi$ gives a linear transformation $\phi^{\ast} : W^{\ast} \longrightarrow V^{\ast}$. Thus if $\Lambda : W \longrightarrow F$ is any linear functional, then $\phi^{\ast} (\Lambda) \in V^{\ast}$ is the linear functional $\Lambda \circ \phi : V \longrightarrow F$. We will mainly be concerned with the case where $F =\mathbb{C}$.

Exercise 2.3.1: Show that if $T : V \longrightarrow V$ is a linear transformation, and if $M$ is the matrix of $T$ with respect to some basis $v_1, \cdots, v_D$, then the matrix of $T^{\ast} : V^{\ast} \longrightarrow V^{\ast}$ with respect to the dual basis $\lambda_1, \cdots, \lambda_D$ is the transpose of $M$. Thus if $M = (m_{i j})$ we have $T (v_i) = \sum_j m_{j i} v_j$, and you must prove that $T^{\ast} (\lambda_i) = \sum_j m_{i j} \lambda_j$.

Now let $(\pi, V)$ be a representation. We then have another representation $( \hat{\pi}, V^{\ast})$ of $G$ on the dual space, defined by $\hat{\pi} (g) = \pi (g^{- 1})^{\ast}$. Note that we need the inverse to compensate for the fact that the dual is a contravariant functor, so that $\hat{\pi} (g h) = \hat{\pi} (g) \hat{\pi} (h)$. In concrete terms if $\Lambda \in V^{\ast}$ then $\hat{\pi} (g) \Lambda$ is the linear functional

| \[ ( \hat{\pi} (g) \Lambda) (v) = \Lambda (\pi (g)^{- 1} v) . \] | (2.3.1) |
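
To see concretely why the inverse is needed, here is a short verification of the homomorphism property, using only (2.3.1):

\[ ( \hat{\pi} (g) \hat{\pi} (h) \Lambda) (v) = ( \hat{\pi} (h) \Lambda) (\pi (g)^{- 1} v) = \Lambda (\pi (h)^{- 1} \pi (g)^{- 1} v) = \Lambda (\pi (g h)^{- 1} v) = ( \hat{\pi} (g h) \Lambda) (v) . \]

Without the inverse, the same computation would produce $\pi (h g)$ in place of $\pi (g h)^{- 1}$, so the order of multiplication would be reversed, and this in general fails to be a homomorphism when $G$ is nonabelian.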

Theorem 2.3.1: If $\pi : G \longrightarrow \operatorname{GL} (V)$ is a representation, then there exists an inner product on $V$ such that $\left\langle \pi (g) v, \pi (g) w \right\rangle = \left\langle v, w \right\rangle$ for all $g \in G$ and $v, w \in V$.

We can express the identity $\left\langle \pi (g) v, \pi (g) w \right\rangle = \left\langle v, w \right\rangle$ by saying that $\left\langle \;, \; \right\rangle$ is invariant under the action of $G$. Or we may say that $\pi (g)$ is unitary. Indeed, if $T : V \longrightarrow V$ is a linear transformation of a finite-dimensional Hilbert space $V$ such that $\left\langle T v, T w \right\rangle = \left\langle v, w \right\rangle$ for all $v, w \in V$, we say that $T$ is unitary. The unitary linear transformations form a group, called the unitary group $U (V)$. Unlike $\operatorname{GL} (V)$, it has the important topological property of being compact. The content of the theorem is that given any representation, an inner product can be chosen so that $\pi (G)$ is contained in the unitary group.
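
A standard way to produce such an inner product is averaging. The following is only a sketch, under the assumption that the proof proceeds along these lines; the auxiliary inner product $\left\langle \;, \; \right\rangle_0$ is notation introduced here. Start with any inner product $\left\langle \;, \; \right\rangle_0$ on $V$ and define

\[ \left\langle v, w \right\rangle = \frac{1}{|G|} \sum_{g \in G} \left\langle \pi (g) v, \pi (g) w \right\rangle_0 . \]

This is positive definite, since every summand of $\left\langle v, v \right\rangle$ is nonnegative and the term with $g = 1$ is positive when $v \neq 0$. It is invariant because, for $h \in G$, the substitution $g \longmapsto g h^{- 1}$ gives

\[ \left\langle \pi (h) v, \pi (h) w \right\rangle = \frac{1}{|G|} \sum_{g \in G} \left\langle \pi (g h) v, \pi (g h) w \right\rangle_0 = \left\langle v, w \right\rangle . \]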


Now if $(\pi, V)$ is a representation and $U$ is a subspace of $V$, we say that $U$ is invariant if $\pi (g) U \subseteq U$ for all $g \in G$. The representation is called irreducible if $V \neq 0$, and if the only invariant subspaces are $0$ and $V$. Clearly every nonzero representation contains an irreducible subspace: take a nonzero invariant subspace of minimal dimension. If $V$ is not irreducible, and is nonzero, then we say $V$ is reducible, and this means it has a proper nonzero invariant subspace.

Theorem 2.3.2: (Maschke.) Let $(\pi, V)$ be a representation of the finite group $G$. Then $V$ is a direct sum of irreducible subspaces.


We recall that a matrix $A$ is called diagonalizable if $M^{- 1} A M$ is diagonal for some invertible matrix $M$. We also say that a linear transformation is diagonalizable if its matrix with respect to some (equivalently, any) basis is diagonalizable.

Lemma 2.3.1: If $A$ represents the linear transformation $T$ of the vector space $V$, then $A$ is diagonalizable if and only if $V$ has a basis of eigenvectors of $T$.


Proposition 2.3.1: Let $V$ be a finite-dimensional vector space and let $T : V \longrightarrow V$ be a linear transformation of finite order, so $T^n = I$ (the identity map) for some $n$. Then $V$ has a basis of eigenvectors for $T$. (So $T$ is diagonalizable.)

This can be proved using the Jordan canonical form, but we will prove it as a consequence of Maschke's theorem (Theorem 2.3.2).
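
To illustrate the statement in the simplest case, suppose $T^2 = I$. The following direct computation is only an illustration, not the proof via Maschke's theorem. Every $v \in V$ decomposes as

\[ v = \frac{1}{2} (v + T v) + \frac{1}{2} (v - T v), \hspace{2em} T (v \pm T v) = \pm (v \pm T v), \]

so $V$ is the direct sum of the eigenspaces for the eigenvalues $1$ and $- 1$, and a basis of eigenvectors is obtained by combining bases of the two eigenspaces. For general $n$ the same idea works with $\zeta = e^{2 \pi i / n}$ and the operators below, where the notation $P_k$ is introduced here:

\[ P_k = \frac{1}{n} \sum_{j = 0}^{n - 1} \zeta^{- j k} T^j, \hspace{2em} T P_k = \zeta^k P_k, \hspace{2em} \sum_{k = 0}^{n - 1} P_k = I. \]

The image of $P_k$ consists of eigenvectors with eigenvalue $\zeta^k$ (together with $0$), and the last identity shows that these images span $V$.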


We now introduce a ring called the group algebra, denoted $\mathbb{C}[G]$. As a vector space over $\mathbb{C}$ it is just the space of all functions on the group. It has a multiplication called convolution and denoted $\ast$ in which if $f_1, f_2 \in \mathbb{C}[G]$, then $f_1 \ast f_2$ is the function

| \[ (f_1 \ast f_2) (x) = \sum_{y \in G} f_1 (y) f_2 (y^{- 1} x) = \sum_{\begin{array}{c} y, z \in G\\ y z = x \end{array}} f_1 (y) f_2 (z) = \sum_{z \in G} f_1 (x z^{- 1}) f_2 (z) . \] | (2.3.2) |
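
As a small worked example (the group and the names $a_i, b_i$ are chosen here for illustration), let $G = \{1, s\}$ with $s^2 = 1$, and write $a_i = f_i (1)$, $b_i = f_i (s)$. Then (2.3.2) gives

\[ (f_1 \ast f_2) (1) = a_1 a_2 + b_1 b_2, \hspace{2em} (f_1 \ast f_2) (s) = a_1 b_2 + b_1 a_2 . \]

This is the rule for expanding the formal product $(a_1 + b_1 s) (a_2 + b_2 s)$ with $s^2 = 1$, which is one way to remember the definition of convolution.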

Exercise 2.3.2: If $g \in G$ let $\delta_g$ be the function defined by $\delta_g (x) = 1$ if $x = g$, 0 otherwise. Show that $\delta_{g h} = \delta_g \ast \delta_h$ and conclude that $g \longmapsto \delta_g$ is an injective homomorphism of $G$ into $\mathbb{C}[G]^{\times}$.

If one makes use of this exercise, then one may identify $G$ with its image under the homomorphism $\delta$. This works for finite groups, or more generally discrete topological groups, but not in general. For example, there is no analog of $\delta_g$ in $C_c^{\infty} (\mathbb{R})$.

If $(\pi, V)$ is a representation, then we may define a multiplication $\mathbb{C}[G] \times V \longrightarrow V$ by \[ f \cdot v = \sum_{g \in G} f (g) \pi (g) v. \] Let us check that $V$ becomes a $\mathbb{C}[G]$-module with this multiplication. If $f_1, f_2$ are functions on $G$ then \[ f_1 \cdot (f_2 \cdot v) = f_1 \cdot \left( \sum_{z \in G} f_2 (z) \pi (z) v \right) = \sum_{y, z \in G} f_1 (y) f_2 (z) \pi (y z) v. \] Collecting the terms with $y z = g$, this may be written \[ \sum_{g \in G} \left( \sum_{y z = g} f_1 (y) f_2 (z) \right) \pi (g) v = (f_1 \ast f_2) \cdot v. \]

Proposition 2.3.2: Let $V$ be a $ \mathbb{C}[G]$-module. Since $\mathbb{C} \subset \mathbb{C}[G]$, $V$ is a vector space over $\mathbb{C}$, and we assume it to be finite-dimensional. Then there exists a representation $(\pi, V)$ such that the $ \mathbb{C}[G]$-module structure on $V$ is the one determined by this representation.

Thus representations and modules over the group algebra are really the same thing. We will sometimes use the expression $G$-module to mean $\mathbb{C}[G]$-module.


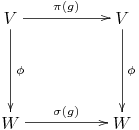

Now let $(\pi, V)$ and $(\sigma, W)$ be two representations. Then we call a linear map $\phi : V \longrightarrow W$ an intertwining operator if for all $g \in G$ the following diagram is commutative:

|

Exercise 2.3.3: Prove that $\phi : V \longrightarrow W$ is an intertwining operator if and only if it is a $\mathbb{C}[G]$-module homomorphism.

We will sometimes say that $\phi : V \longrightarrow W$ is a $G$-module homomorphism to mean that it is a $\mathbb{C}[G]$-module homomorphism; we see that this is the same as saying that it is an intertwining operator. We may also express the same thing by saying that the map is $G$-equivariant. If $R$ is a ring and $V$, $W$ are $R$-modules, the space of $R$-module homomorphisms $V \longrightarrow W$ will be denoted $\operatorname{Hom}_R (V, W)$; if $R =\mathbb{C}[G]$ we will also use the notation $\operatorname{Hom}_G (V, W)$ to mean the same thing.

The notion of irreducibility extends to an arbitrary ring $R$. If $R$ is a ring and $M$ is an $R$-module, then $M$ is called simple if it is nonzero and has no submodules other than $0$ and $M$. If $R =\mathbb{C}[G]$, then simple and irreducible are synonymous terms.

Proposition 2.3.3: (Schur's Lemma.) (i) Let $R$ be a ring and let $M, N$ be simple $R$-modules. Let $\phi \in \operatorname{Hom}_R (M, N)$. Then $\phi$ is either zero or an isomorphism.

(ii) Let $R =\mathbb{C}[G]$ and let $V$ be an irreducible $G$-module that is finite-dimensional as a complex vector space. Let $\phi \in \operatorname{End}_R (V)$. Then there exists a complex number $\lambda \in \mathbb{C}$ such that $\phi (v) = \lambda v$ for all $v \in V$.

Proof.

For (ii), since $\phi$ is a linear transformation of a complex vector space it has an eigenvalue $\lambda$. Thus $U =\{v \in V| \phi (v) = \lambda v\}$ is nonzero. It is a submodule since if $f \in R$ and $v \in U$ then $\phi (f v) = f \phi (v) = \lambda f v$, so $f v \in U$. Since it is a nonzero submodule of a simple module, $U = V$.
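
As an illustration of (ii), suppose that $G$ is abelian and $(\pi, V)$ is irreducible. The following short argument is a sketch that connects with the linear characters of the abelian case; the symbol $\chi$ is introduced here. For fixed $g \in G$ we have

\[ \pi (g) \pi (h) = \pi (g h) = \pi (h g) = \pi (h) \pi (g) \hspace{2em} \text{for all $h \in G$}, \]

so $\pi (g) \in \operatorname{End}_{\mathbb{C}[G]} (V)$, and by (ii) there is a scalar $\chi (g)$ with $\pi (g) = \chi (g) I$. Every subspace of $V$ is then invariant, so irreducibility forces $\dim (V) = 1$, and $\chi : G \longrightarrow \mathbb{C}^{\times}$ is a linear character.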

Proposition 2.3.4: Let $R =\mathbb{C}[G]$ and let $V, W$ be irreducible $G$-modules that are finite-dimensional as complex vector spaces. Then \[ \dim_{\mathbb{C}} \; \operatorname{Hom}_{\mathbb{C}[G]} (V, W) = \left\{ \begin{array}{ll} 1 & \text{if $V \cong W$ as $G$-modules;}\\ 0 & \text{otherwise.} \end{array} \right. \]


We may also use Schur's Lemma to complement Theorem 2.3.1 with a uniqueness result. If $V$ is a finite-dimensional complex vector space, we may find a real vector space $V_0 \subset V$ such that $V = V_0 \oplus i V_0$. Indeed, choose a basis $x_1, \cdots, x_d$ of $V$, and let \[ V_0 =\mathbb{R}x_1 \oplus \cdots \oplus \mathbb{R}x_d . \] Now if $v \in V$ we may write (uniquely) $v = v_0 + i v_0'$ with $v_0$ and $v_0'$ in $V_0$. Define $c (v) = v_0 - i v_0'$. We call $V_0$ a real structure on $V$, and $c$ the conjugation relative to this real structure.
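
For example (a sketch with a specific choice of basis, made here for illustration), take $V =\mathbb{C}^d$ with $x_1, \cdots, x_d$ the standard basis. Then $V_0 =\mathbb{R}^d$ and

\[ c (z_1, \cdots, z_d) = ( \overline{z_1}, \cdots, \overline{z_d}) . \]

In general $c$ is additive, satisfies $c (\lambda v) = \overline{\lambda} c (v)$ for $\lambda \in \mathbb{C}$, and $c \circ c$ is the identity. The real structure depends on the basis: for $d = 1$ and $x_1 = i$ one finds $V_0 = i\mathbb{R}$ and $c (z) = - \overline{z}$.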

Proposition 2.3.5: Let $V$ be an irreducible, finite-dimensional $G$-module. Then any two $G$-invariant inner products on $V$ are proportional.

Since $\left\langle v, v \right\rangle > 0$ for nonzero $v \in V$, the constant of proportionality is positive.

Proof.

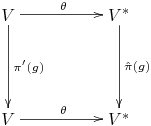

We will show that given an inner product on $V$ we can construct an intertwining operator $\theta : V \longrightarrow V^{\ast}$ that intertwines the representations $\pi'$ on $V$ and $\hat{\pi}$ on $V^{\ast}$. That is, we have a commutative diagram:

|

To check the commutativity of this diagram, consider \begin{eqnarray*} ( \hat{\pi} (g) \theta (v)) (x) & = & \theta (v) (\pi (g)^{- 1} x) = \left\langle \pi (g)^{- 1} x, c (v) \right\rangle\\ & = & \left\langle x, \pi (g) c (v) \right\rangle = \left\langle x, c \pi' (g) v \right\rangle = \theta (\pi' (g) v) (x) . \end{eqnarray*} Since this is true for all $x \in V$, we have $\hat{\pi} (g) \theta (v) = \theta \pi' (g) (v)$, and since this is true for all $v \in V$, the diagram commutes.

One may check that $\pi'$ and $\hat{\pi}$ are both irreducible representations (Exercise 2.3.4), and so by Schur's Lemma, $\theta$ is determined up to constant multiple. Hence the inner product is determined up to constant multiple.

Exercise 2.3.4: Complete the last proof by showing that $\pi'$ and $\hat{\pi}$ are both irreducible.

The irreducible representations will play the same role in the representation theory of nonabelian groups as the linear characters in the case of abelian groups.

Let $(\pi, V)$ be an irreducible representation. We choose a $G$-invariant inner product on $V$ (Theorem 2.3.1). Let $\mathcal{R}_V$ be the vector space spanned by functions on $G$ of the form $f_{v, w} : G \longrightarrow \mathbb{C}$, where for $v, w \in V$ we define

| \[ f_{v, w} (g) = \left\langle \pi (g) v, w \right\rangle . \] | (2.3.3) |

Elements of $\mathcal{R}_V$ are called matrix coefficients (or representative functions) of $\pi$. The reason for the term "matrix coefficient" can be understood as follows. Using the $G$-invariant inner product of Theorem 2.3.1 we may find an orthonormal basis $v_1, \cdots, v_d$ of $V$, where $d = \dim (V)$. Now let us represent $\pi (g)$ as a matrix \[ \pi (g) = \left(\begin{array}{ccc} \pi_{11} (g) & \cdots & \pi_{1 d} (g)\\ \vdots & & \vdots\\ \pi_{d 1} (g) & \cdots & \pi_{d d} (g) \end{array}\right) \] with respect to this basis. This means that $\pi (g) v_j = \sum_i \pi_{i j} (g) v_i$. Since the basis is orthonormal, it follows that

| \[ \left\langle \pi (g) v_j, v_i \right\rangle = \pi_{i j} (g) . \] | (2.3.4) |
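
The identity (2.3.4) can be checked in one line, assuming (as elsewhere in this section) that the inner product is linear in its first variable and that the basis is orthonormal:

\[ \left\langle \pi (g) v_j, v_i \right\rangle = \left\langle \sum_k \pi_{k j} (g) v_k, v_i \right\rangle = \sum_k \pi_{k j} (g) \left\langle v_k, v_i \right\rangle = \pi_{i j} (g) . \]

In the notation of (2.3.3) this says that $\pi_{i j} = f_{v_j, v_i}$, so the entries of the matrix of $\pi (g)$ are particular elements of $\mathcal{R}_V$.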

Spoiler Warning! We will eventually show that the functions $\sqrt{d} \pi_{i j} (g)$ are orthonormal, and that taking the union of these over all the isomorphism classes of irreducible representations of $G$ gives an orthonormal basis of $L^2 (G)$.

We will find an orthonormal basis of $L^2 (G)$ from among the matrix coefficients, where as before $L^2 (G)$ is the space of functions on $G$, with the inner product

| \[ \left\langle \alpha, \beta \right\rangle_2 = \frac{1}{|G|} \sum_{x \in G} \alpha (x) \overline{\beta (x)} . \] | (2.3.5) |

Proposition 2.3.6: (Schur Orthogonality I.) Let $V$ and $W$ be simple $G$-modules, and let $\alpha \in \mathcal{R}_V$, $\beta \in \mathcal{R}_W$. If $V \ncong W$ then $\alpha, \beta$ are orthogonal in $L^2 (G)$.

This is the first of several results that go under the umbrella called Schur orthogonality.

Proof.

Therefore assume $\alpha = f_{v_1, v_2}$ and $\beta = f_{w_1, w_2}$. We assume that $\left\langle \alpha, \beta \right\rangle \neq 0$, and we will construct a $G$-module isomorphism $\phi : V \longrightarrow W$. Specifically, define \[ \phi (x) = \frac{1}{|G|} \sum_{g \in G} \left\langle \pi (g) x, v_2 \right\rangle \sigma (g)^{- 1} w_2, \hspace{2em} x \in V. \]

Let us show that this is $G$-equivariant. We have, for $h \in G$, \[ \phi (\pi (h) x) = \frac{1}{|G|} \sum_{g \in G} \left\langle \pi (g h) x, v_2 \right\rangle \sigma (g)^{- 1} w_2 . \] We make the variable change $g \longmapsto g h^{- 1}$, so $\pi (g h) \longmapsto \pi (g)$ and $\sigma (g)^{- 1} \longmapsto \sigma (h) \sigma (g)^{- 1}$, and we see that this equals \[ \frac{1}{|G|} \sum_{g \in G} \left\langle \pi (g) x, v_2 \right\rangle \sigma (h) \sigma (g)^{- 1} w_2 = \sigma (h) \phi (x) . \] Thus $\phi$ is equivariant, so by Schur's Lemma it is either zero or an isomorphism. To see that it is nonzero, we use the invariance of the inner product on $W$ and note that \begin{eqnarray*} \left\langle \phi (v_1), w_1 \right\rangle & = & \frac{1}{|G|} \sum_{g \in G} \left\langle \pi (g) v_1, v_2 \right\rangle \left\langle \sigma (g)^{- 1} w_2, w_1 \right\rangle\\ & = & \frac{1}{|G|} \sum_{g \in G} \left\langle \pi (g) v_1, v_2 \right\rangle \left\langle w_2, \sigma (g) w_1 \right\rangle\\ & = & \frac{1}{|G|} \sum_{g \in G} \left\langle \pi (g) v_1, v_2 \right\rangle \overline{\left\langle \sigma (g) w_1, w_2 \right\rangle} = \left\langle f_{v_1, v_2}, f_{w_1, w_2} \right\rangle_2 \end{eqnarray*} which is nonzero; so $\phi$ cannot be the zero map.

Now we know that representative functions for nonisomorphic irreducible $G$-modules are orthogonal. We need a complementary result for $G$-modules that are isomorphic. But if the modules are isomorphic, we may as well assume that they are the same.

Proposition 2.3.7: (Schur Orthogonality II.) Let $V$ be an irreducible $G$-module. Then there exists a positive constant $d (V)$ such that for $v_1, v_2, w_1, w_2 \in V$ we have \[ \left\langle f_{v_1, v_2}, f_{w_1, w_2} \right\rangle_2 = \frac{1}{d (V)} \left\langle v_1, w_1 \right\rangle \overline{\left\langle v_2, w_2 \right\rangle} . \]

Note that on the left-hand side, the inner product is the one on $L^2 (G)$, and on the right-hand side, the inner product is the one in $V$. We will eventually prove that $d (V) = \dim (V)$.
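
As a sanity check in the simplest case (a sketch; the symbol $\chi$ and the choice of inner product are made here for illustration), let $V =\mathbb{C}$ with $\left\langle v, w \right\rangle = v \overline{w}$ and $\pi (g) = \chi (g)$ for a linear character $\chi$. Since $\chi (g)^{|G|} = 1$ we have $| \chi (g) | = 1$, and $f_{v, w} (g) = \chi (g) v \overline{w}$. Hence

\[ \left\langle f_{v_1, v_2}, f_{w_1, w_2} \right\rangle_2 = \frac{1}{|G|} \sum_{g \in G} | \chi (g) |^2 \, v_1 \overline{v_2} \, \overline{w_1} w_2 = \left( v_1 \overline{w_1} \right) \overline{\left( v_2 \overline{w_2} \right)} = \left\langle v_1, w_1 \right\rangle \overline{\left\langle v_2, w_2 \right\rangle}, \]

which agrees with the proposition with $d (V) = 1 = \dim (V)$.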


Lemma 2.3.2: Suppose that $V$ is a finite-dimensional Hilbert space, and let $\Lambda : V \longrightarrow \mathbb{C}$ be any linear functional. Then there exists a unique vector $v \in V$ such that $\Lambda (x) = \left\langle x, v \right\rangle$.

Proof.

Still, the map $v \longmapsto \lambda_v$ is $\mathbb{R}$-linear, and $\dim_{\mathbb{R}} (V) = 2 \cdot \dim_{\mathbb{C}} (V) = 2 \cdot \dim_{\mathbb{C}} (V^{\ast}) = \dim_{\mathbb{R}} (V^{\ast})$. As a linear transformation of real vector spaces of the same dimension, it will be surjective if and only if it is injective. To see that it is injective, we observe that $\lambda_v (v) = \left\langle v, v \right\rangle > 0$ if $v \neq 0$, so $\lambda_v$ is not the zero map unless $v = 0$.

There exists a representation $\rho : G \longrightarrow \operatorname{GL} (L^2 (G))$, called the (right) regular representation, which is the action by right translation. Specifically, if $f \in L^2 (G)$ and $g \in G$, then

| \[ (\rho (g) f) (x) = f (x g) . \] | (2.3.6) |
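
It may help to verify directly that right translation is a homomorphism; the computation below uses only (2.3.6):

\[ (\rho (g) \rho (h) f) (x) = (\rho (h) f) (x g) = f (x g h) = (\rho (g h) f) (x) . \]

By contrast, left translation requires an inverse: sending $f$ to the function $x \longmapsto f (g^{- 1} x)$ defines a representation, while $x \longmapsto f (g x)$ reverses the order of multiplication and so does not in general.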

Exercise 2.3.5: Check that the inner product (2.3.5) is invariant with respect to the regular representation. We recall that this means $\left\langle \rho (g) f_1, \rho (g) f_2 \right\rangle_2 = \left\langle f_1, f_2 \right\rangle_2$.

Let us consider how the right regular representation affects matrix coefficients.

Lemma 2.3.3: Let $\pi : G \longrightarrow \operatorname{GL} (V)$ be a representation, where $V$ is given a $G$-invariant inner product. If $v, w \in V$, then $\rho (g) f_{v, w} = f_{\pi (g) v, w}$.
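
The lemma comes down to a one-line computation from (2.3.3) and (2.3.6), given here as a sketch that may or may not match the argument the author has in mind:

\[ (\rho (g) f_{v, w}) (x) = f_{v, w} (x g) = \left\langle \pi (x g) v, w \right\rangle = \left\langle \pi (x) \pi (g) v, w \right\rangle = f_{\pi (g) v, w} (x) . \]

In particular $\mathcal{R}_V$ is invariant under right translation.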


Lemma 2.3.4: Suppose that $f \in L^2 (G)$ is orthogonal to the matrix coefficients of all irreducible representations. Then $f = 0$.

Proof.

Evaluation at the identity is a linear functional $\phi \longmapsto \phi (1)$ on $W_0$. By Lemma 2.3.2, there exists a vector $\xi \in W_0$ such that $\phi (1) = \left\langle \phi, \xi \right\rangle_2$ for all $\phi \in W_0$. Now consider that \[ \left\langle \rho (g) f, \xi \right\rangle_2 = (\rho (g) f) (1) = f (1 \cdot g) = f (g) . \] We see that $f$ itself is a matrix coefficient of the irreducible representation of $G$ on $W_0$. Since $f$ is nonzero, it is not orthogonal to itself, which is a contradiction since it was assumed to be orthogonal to all matrix coefficients.

The following theorem gives a nearly complete description of an orthonormal basis of $L^2 (G)$.

Theorem 2.3.3: The finite group $G$ has only finitely many isomorphism classes of irreducible representations. If $V_1, \cdots, V_h$ are representatives of these isomorphism classes (so that any irreducible $G$-module is isomorphic to $V_i$ for some unique $i$), then $L^2 (G) = \bigoplus_i \mathcal{R}_{V_i}$. The spaces $\mathcal{R}_{V_i}$ are mutually orthogonal, so if we exhibit an orthonormal basis of $\mathcal{R}_{V_i}$ for each $i$, the union of these is an orthonormal basis of $L^2 (G)$. The dimension of $\mathcal{R}_{V_i}$ is $D_i^2$, where $D_i = \dim (V_i)$. Let $v_1, \cdots, v_{D_i}$ be an orthonormal basis of $V_i$ with respect to a $G$-invariant inner product on $V_i$. Then with $d_i = d (V_i)$ as in Proposition 2.3.7, the $D_i^2$ functions $\sqrt{d_i} f_{v_k, v_l}$ are an orthonormal basis of $\mathcal{R}_{V_i}$.

Eventually we will prove that $d_i = D_i$ (Proposition 2.4.5).


Proposition 2.3.8: Let $G$ act on $\mathcal{R}_{V_i}$ by the right regular representation. Then as $G$-modules \[ \mathcal{R}_{V_i} \cong V_i \oplus \cdots \oplus V_i \hspace{2em} \text{($\dim (V_i)$ copies.)} \]


Proposition 2.3.9: If $V_1, \cdots, V_h$ are representatives of the irreducible isomorphism classes of $G$ and $D_i = \dim (V_i)$, then \[ \sum_i D_i^2 = |G|. \]


We recall that the group algebra $\mathbb{C}[G]$ is, like $L^2 (G)$, the vector space of complex valued functions on $G$. But whereas $L^2 (G)$ is an inner product space, $\mathbb{C}[G]$ is a ring. In view of these different algebraic structures, we will use different notations to denote them. We can rewrite the identity $L^2 (G) = \bigoplus_i \mathcal{R}_{V_i}$ in the form \[ \mathbb{C}[G] = \prod_i \mathcal{R}_{V_i}, \] and this is appropriate since we will see that this is actually a direct product of rings. After all, we discussed in Section 1.3 that the Cartesian product of rings has the universal property of the product, but not the universal property of the coproduct, so it is now more appropriate to use $\prod$ instead of $\bigoplus$. In the Exercises, you will show that $\mathcal{R}_{V_i}$ is a two-sided ideal, and that $\mathcal{R}_{V_i}$ is a ring isomorphic to $\operatorname{Mat}_{D_i} (\mathbb{C})$, so \[ \mathbb{C}[G] \cong \prod_i \operatorname{Mat}_{D_i} (\mathbb{C}) . \]
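
Two familiar cases may help to fix ideas; they are standard facts, recalled here as illustration rather than derived in this section. If $G$ is abelian of order $n$, every irreducible representation is one-dimensional, so $h = n$ and $\mathbb{C}[G] \cong \mathbb{C} \times \cdots \times \mathbb{C}$ ($n$ factors). For the symmetric group $S_3$ the irreducible representations are the trivial character, the sign character, and one two-dimensional representation, so

\[ \sum_i D_i^2 = 1^2 + 1^2 + 2^2 = 6 = |S_3|, \hspace{2em} \mathbb{C}[S_3] \cong \mathbb{C} \times \mathbb{C} \times \operatorname{Mat}_2 (\mathbb{C}) . \]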

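Exercise 2.3.6: Let $V$ be an irreducible $G$-module. Show that the convolution (2.3.2) of two matrix coefficients is computed by the formula \[ f_{t, u} \ast f_{v, w} = \frac{|G| \left\langle t, w \right\rangle}{d (V)} f_{v, u} . \] (The factor $|G|$ appears because the sum in (2.3.2) is not normalized, whereas the inner product (2.3.5) is.)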

Exercise 2.3.7: Show that if $f_1$ and $f_2$ are matrix coefficients for two nonisomorphic irreducible $G$-modules, then $f_1 \ast f_2 = 0$.

Exercise 2.3.8: Prove that $\mathcal{R}_V$ is a two-sided ideal in $\mathbb{C}[G]$, and that it has the structure of a ring isomorphic to $\operatorname{Mat}_D (\mathbb{C})$, where $D = \dim (V)$. Hint: choose an orthonormal basis $v_1, \cdots, v_D$ of $V$. Every element of $\mathcal{R}_V$ can be written uniquely in the form \[ \frac{d (V)}{|G|} \sum_{i, j} a_{i j} f_{v_j, v_i} . \] Map this to the matrix $(a_{i j})$.